The EU AI Act

The EU, recognizing the transformative potential of AI, has proposed a legal framework to balance innovation with the protection of fundamental rights and societal values. This legislative proposal is referred to as the Artificial Intelligence Act (AIA).

Given the growing adoption of AI systems, a robust legal framework that provides clear guidelines on what is allowed and what is not is essential. The AIA serves as a much-needed compass for the EU, guiding the application of AI in law enforcement in a way that upholds the Union's values while also leveraging the transformative capabilities of the technology.

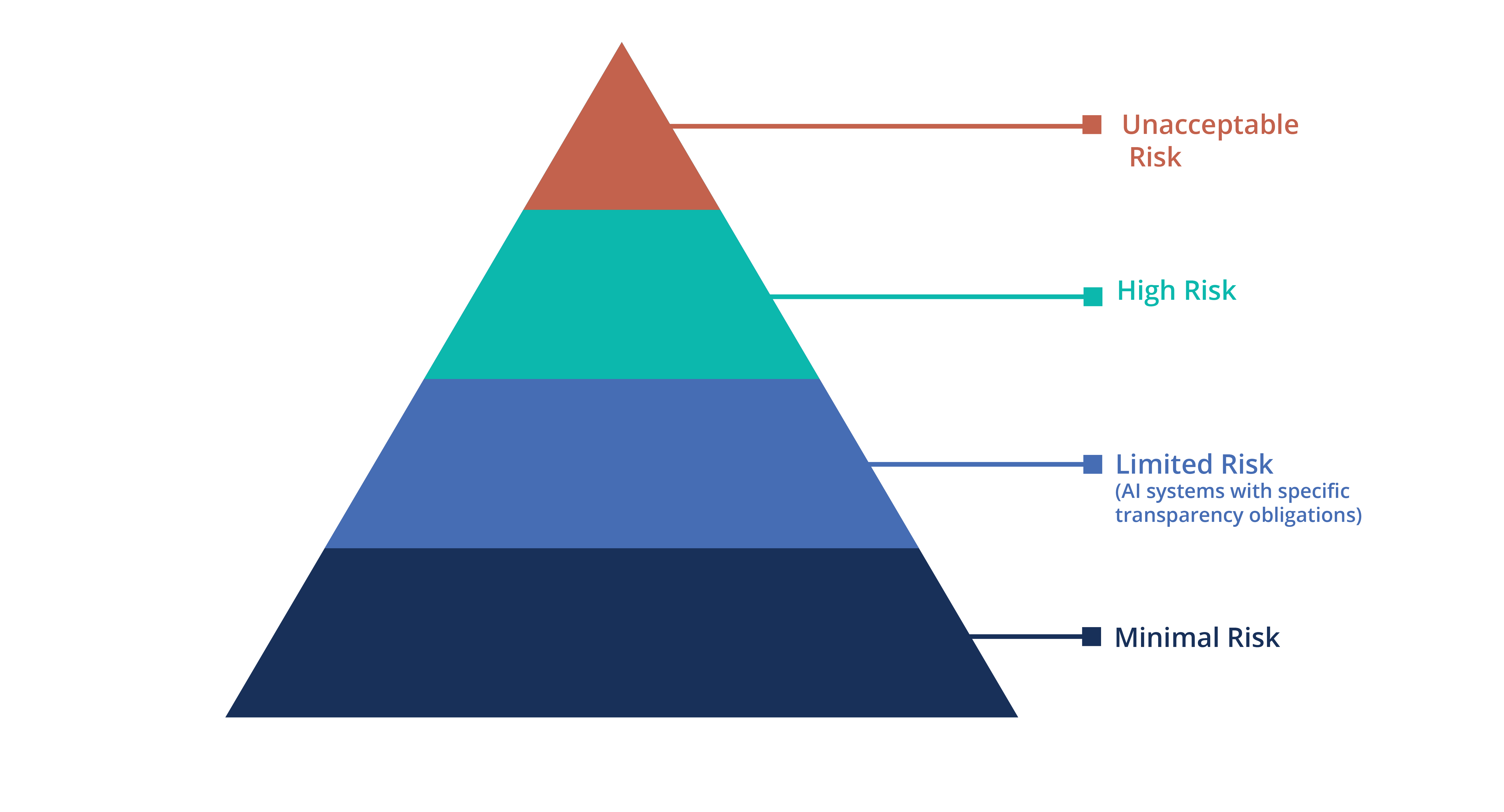

Within the proposed framework, AI applications are categorized according to their potential risk level. The "Unacceptable risk AI" category covers applications that are prohibited because they go against EU values, such as social scoring. The "High-risk AI" category includes specific systems that could jeopardize people's safety or infringe on their fundamental rights; these systems will face stringent mandatory requirements and must undergo a conformity assessment. In addition, the AIA will likely set requirements for General Purpose AI and foundation models, as well as obligations for deployers (users), providers (developers) and distributors of AI systems.

Source: https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

Prohibited AI practices

The AI Act identifies specific uses of AI that are strictly off-limits because of the risks they pose to individual rights and EU principles. Applications in this category include AI systems that manipulate human behaviour, social scoring systems and AI systems that employ subliminal techniques beyond a person's consciousness. The European Commission's draft AI Act also proposes a ban on real-time remote biometric identification (RBI) systems in public areas. These systems can recognize individuals instantly and from a distance by analyzing their biometric data, but their public use might jeopardize citizens' right to privacy and freedom of assembly. However, exceptions are proposed for use by law enforcement in urgent situations, provided there is prior approval.

The EU Parliament has taken a more restrictive position, proposing further prohibitions. These include bans on both real-time and post (after-the-fact) biometric identification in publicly accessible areas, with the only leniency offered to law enforcement when prosecuting serious crimes and only after securing judicial authorization. Another significant proposal is a ban on biometric categorization, which would prevent systems from classifying individuals based on sensitive traits, a practice that could lead to discriminatory outcomes.

One of the most critical additions from the EU Parliament has been the prohibition on predictive policing systems. Such systems, designed to predict potential criminal activities based on profiling, location, or past behaviour, have raised concerns. There is a fear that they might inadvertently reinforce biases, leading to unwarranted surveillance or interventions.

High-Risk AI Systems in Law Enforcement

The AIA categorizes certain AI tools in law enforcement as "high-risk" because of their potential impact on individual freedoms and safety. Some of these applications include:

- Post-event biometric identification: AI systems used to support the unique identification of individuals, other than systems used in real time. In such scenarios, the collection of the biometric sample and the biometric comparison happen with a significant delay.

- Individual risk assessment of natural persons: AI systems that help law enforcement authorities assess the likelihood of an individual committing an offense, reoffending, or becoming a victim of a crime.

- Emotion detection & polygraphs: AI-driven tools that act as polygraphs or are used to detect the emotional state of an individual, potentially indicating truthfulness, stress, or intent.

- Evaluating reliability of evidence: AI applications that assist in determining the credibility or reliability of evidence collected during investigations or prosecutions. This might include analysing digital footprints, communications, or patterns of behaviour.

- Predictive policing: Systems that predict potential criminal activities based on profiles of individuals. These systems might analyse past behaviour, social connections, or other factors to predict future criminal actions.

- Criminal profiling: AI tools that create profiles of individuals during the detection, investigation, or prosecution phases of criminal offenses. This can involve aggregating data from various sources to generate a comprehensive profile that aids investigations.

Because these systems are categorized as high-risk, users, providers, developers and sellers of such AI systems are expected to follow strict rules. Every application, for instance, must undergo an exhaustive risk assessment and mitigation process to identify and counter potential hazards (conformity assessment). The data underlying these AI systems must be of the highest quality, both to reduce risks and to avoid discriminatory outcomes and algorithmic bias.

IMPORTANT: As noted above, the discussions between the Parliament, the Council and the Commission are ongoing. Hence, the high-risk classification of certain applications mentioned above might be reconsidered.